Azure Multi-AZ Gateway Deployment

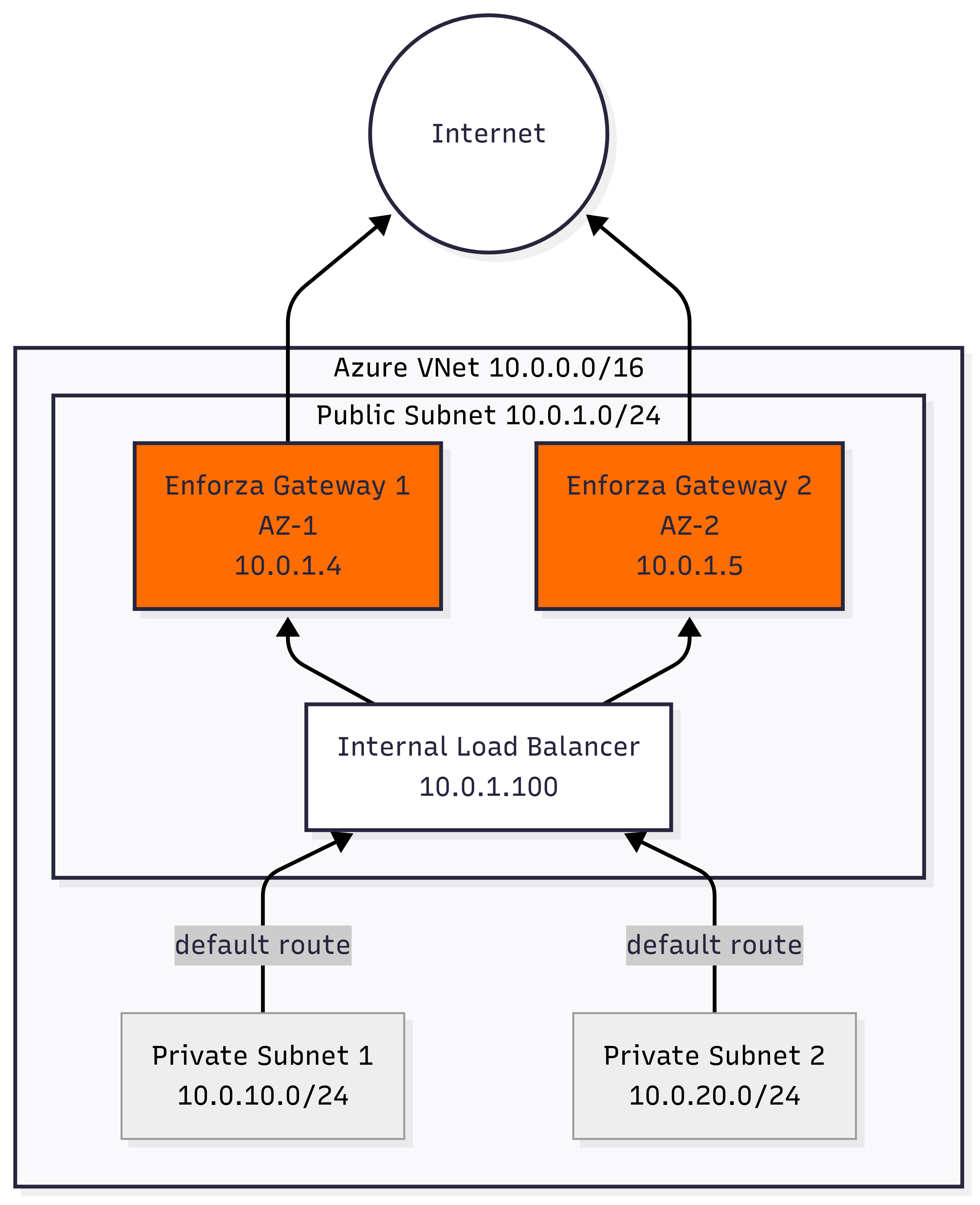

This Terraform configuration creates a highly available Azure networking setup with two Enforza gateway servers deployed across different Availability Zones, load-balanced for redundancy and high availability.

This architecture is designed for outbound (egress) traffic only. It does not publish internal applications to the internet, so it has no DNAT or port-forwarding requirement.

Architecture

Components Created

Network Infrastructure

- VNet: 10.0.0.0/16 address space

- Public Subnet: 10.0.1.0/24 (contains both Enforza gateways and load balancer)

- Private Subnet 1: 10.0.10.0/24 (routes via load balancer)

- Private Subnet 2: 10.0.20.0/24 (routes via load balancer)
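As a rough illustration, the address plan above could be expressed in Terraform along the following lines. This is a sketch rather than the repository's actual main.tf: the resource names azurerm_virtual_network.main and azurerm_subnet.public and all "name" values are assumptions, while azurerm_resource_group.main, azurerm_subnet.private_1/private_2 and the variable names are the ones used in the examples elsewhere in this README.

```hcl
# Sketch only. Fragment intended to sit next to the existing resource group;
# names marked "assumed" are illustrative and may differ from the repository.
resource "azurerm_virtual_network" "main" {
  name                = "enforza-ha-vnet" # assumed
  location            = azurerm_resource_group.main.location
  resource_group_name = azurerm_resource_group.main.name
  address_space       = var.vnet_address_space # ["10.0.0.0/16"]
}

# Public subnet: holds both gateways and the internal load balancer frontend
resource "azurerm_subnet" "public" {
  name                 = "public-subnet" # assumed
  resource_group_name  = azurerm_resource_group.main.name
  virtual_network_name = azurerm_virtual_network.main.name
  address_prefixes     = [var.public_subnet_prefix] # 10.0.1.0/24
}

# Private subnets: workloads whose default route points at the load balancer
resource "azurerm_subnet" "private_1" {
  name                 = "private-subnet-1" # assumed
  resource_group_name  = azurerm_resource_group.main.name
  virtual_network_name = azurerm_virtual_network.main.name
  address_prefixes     = [var.private_subnet_1_prefix] # 10.0.10.0/24
}

resource "azurerm_subnet" "private_2" {
  name                 = "private-subnet-2" # assumed
  resource_group_name  = azurerm_resource_group.main.name
  virtual_network_name = azurerm_virtual_network.main.name
  address_prefixes     = [var.private_subnet_2_prefix] # 10.0.20.0/24
}
```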

HA Enforza Gateway Setup

- Gateway 1: enforza-ha-gateway-1 (10.0.1.4) in AZ-1

- Gateway 2: enforza-ha-gateway-2 (10.0.1.5) in AZ-2

- VM Size: Standard_B1s with Ubuntu 22.04 LTS (configured as Enforza Gateway)

- Public IPs: Each gateway has its own public IP for management

- Security: NSG with "permit any any" rules

- Features: Enforza agent automatically installed on both gateways
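The zone placement and fixed addresses listed above might be modelled with a small map and for_each, as sketched below. This assumes azurerm provider 3.x or later (where azurerm_public_ip takes a zones list) and uses hypothetical names (local.gateways, azurerm_public_ip.gateway, azurerm_network_interface.gateway, azurerm_subnet.public); the repository may well structure this differently.

```hcl
# Sketch only: one entry per gateway, pairing its static private IP with an AZ.
locals {
  gateways = {
    "1" = { private_ip = "10.0.1.4", zone = "1" }
    "2" = { private_ip = "10.0.1.5", zone = "2" }
  }
}

# Management public IP per gateway, pinned to the same zone as the VM
resource "azurerm_public_ip" "gateway" {
  for_each            = local.gateways
  name                = "enforza-ha-gateway-${each.key}-pip" # assumed
  location            = azurerm_resource_group.main.location
  resource_group_name = azurerm_resource_group.main.name
  allocation_method   = "Static"
  sku                 = "Standard"
  zones               = [each.value.zone]
}

# NIC per gateway with the fixed private address from the list above
resource "azurerm_network_interface" "gateway" {
  for_each            = local.gateways
  name                = "enforza-ha-gateway-${each.key}-nic" # assumed
  location            = azurerm_resource_group.main.location
  resource_group_name = azurerm_resource_group.main.name

  ip_configuration {
    name                          = "primary" # assumed
    subnet_id                     = azurerm_subnet.public.id
    private_ip_address_allocation = "Static"
    private_ip_address            = each.value.private_ip
    public_ip_address_id          = azurerm_public_ip.gateway[each.key].id
  }
}
```

The gateway VM itself would then set zone = each.value.zone on azurerm_linux_virtual_machine so that the VM, its NIC and its public IP all sit in the same Availability Zone.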

High Availability Components

- Internal Load Balancer: 10.0.1.100 (distributes traffic between gateways)

- Health Probes: TCP port 22 health checks

- Backend Pool: Both gateways in load balancer backend

- Availability Zones: Gateways distributed across first two AZs in region
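A minimal sketch of these load balancer components is shown below. The frontend address, the load balancer name and the TCP 22 probe come from this README; the other names and, in particular, the HA-ports rule (protocol "All", ports 0) are assumptions about how traffic on every port is forwarded to the gateways, not something the source confirms.

```hcl
# Sketch only: internal Standard load balancer in front of the two gateways.
resource "azurerm_lb" "gateway" {
  name                = "enforza-ha-gateway-lb"
  location            = azurerm_resource_group.main.location
  resource_group_name = azurerm_resource_group.main.name
  sku                 = "Standard"

  frontend_ip_configuration {
    name                          = "gateway-frontend" # assumed
    subnet_id                     = azurerm_subnet.public.id # assumed subnet name
    private_ip_address_allocation = "Static"
    private_ip_address            = "10.0.1.100"
  }
}

resource "azurerm_lb_backend_address_pool" "gateway" {
  name            = "gateway-pool" # assumed
  loadbalancer_id = azurerm_lb.gateway.id
}

# TCP connect probe against SSH on each gateway
resource "azurerm_lb_probe" "ssh" {
  name            = "tcp-22" # assumed
  loadbalancer_id = azurerm_lb.gateway.id
  protocol        = "Tcp"
  port            = 22
}

# Assumption: an HA-ports rule so that all TCP/UDP flows reach a healthy gateway
resource "azurerm_lb_rule" "ha_ports" {
  name                           = "ha-ports" # assumed
  loadbalancer_id                = azurerm_lb.gateway.id
  protocol                       = "All"
  frontend_port                  = 0
  backend_port                   = 0
  frontend_ip_configuration_name = "gateway-frontend"
  backend_address_pool_ids       = [azurerm_lb_backend_address_pool.gateway.id]
  probe_id                       = azurerm_lb_probe.ssh.id
}
```

Each gateway NIC would additionally be attached to the pool with an azurerm_network_interface_backend_address_pool_association resource.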

Route Tables

- Private Subnets: Default route (0.0.0.0/0) → Load Balancer (10.0.1.100)

- Load Balancer: Distributes traffic to healthy gateways

- Internet Access: Private subnet VMs route through HA gateway cluster
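The default route described above corresponds to a user-defined route whose next hop is the load balancer frontend. A sketch, assuming the hypothetical names azurerm_route_table.private and the "name" values shown (the subnet resource names match the private VM example in this README):

```hcl
# Sketch only: send all internet-bound traffic from the private subnets
# to the internal load balancer, which forwards it to a healthy gateway.
resource "azurerm_route_table" "private" {
  name                = "private-subnets-rt" # assumed
  location            = azurerm_resource_group.main.location
  resource_group_name = azurerm_resource_group.main.name

  route {
    name                   = "default-via-gateway-lb" # assumed
    address_prefix         = "0.0.0.0/0"
    next_hop_type          = "VirtualAppliance"
    next_hop_in_ip_address = "10.0.1.100"
  }
}

resource "azurerm_subnet_route_table_association" "private_1" {
  subnet_id      = azurerm_subnet.private_1.id
  route_table_id = azurerm_route_table.private.id
}

resource "azurerm_subnet_route_table_association" "private_2" {
  subnet_id      = azurerm_subnet.private_2.id
  route_table_id = azurerm_route_table.private.id
}
```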

Quick Start

1. Clone the repository and navigate to the Azure Multi-AZ directory:

git clone https://github.com/enforza/azure-terraform.git

cd azure-terraform/ha-multi-az

2. Authenticate to Azure:

az login

3. Configure variables:

cp terraform.tfvars.example terraform.tfvars

# Edit terraform.tfvars with:

# - Your subscription ID

# - Your Enforza company ID

# - Authentication method (password or SSH key)

4. Deploy:

terraform init

terraform plan

terraform apply

5. Manage via the Enforza Cloud Controller:

The gateways register themselves in the portal automatically. Log in and, under Devices, you should see the newly provisioned devices with no name. To tell them apart, open each device's details and check which Availability Zone it is provisioned in.

- Add a firewall rule to allow Azure Load Balancer health checks

In the Enforza Cloud Controller, add a rule under MANAGEMENT RULES that permits Azure Load Balancer health checks. The Azure platform sends these probes from a special source IP (168.63.129.16). If that source is not permitted, the load balancer marks the gateways as unhealthy and stops forwarding packets to them.

- SRC IP = 168.63.129.16

- DST IP = Firewall/Gateway

- PROT = TCP

- PORT = 22

- LOG = DISABLED

- DESCRIPTION = AZURE LB HEALTHCHECKS

6. Test HA gateways:

# SSH to gateway 1

ssh azureuser@<gateway_1_public_ip>

# SSH to gateway 2

ssh azureuser@<gateway_2_public_ip>

# Inspect the load balancer configuration (frontend, backend pool, probes)

az network lb show --resource-group enforza-ha-multi-az-gateway --name enforza-ha-gateway-lb

Authentication Options

Choose ONE method in terraform.tfvars:

Option 1: Password Authentication

admin_password = "YourSecurePassword123!"

Option 2: SSH Key Authentication (Recommended)

ssh_public_key = "ssh-rsa AAAAB3NzaC1yc2E... your-key-here"
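One way a configuration could enforce the "choose ONE" rule is a precondition that fails the plan when both or neither value is set. The sketch below assumes Terraform 1.4 or later (for terraform_data) and that both variables default to null; the names terraform_data.auth_check and local.use_ssh_key are hypothetical, and the repository may handle this differently or not at all.

```hcl
# Sketch only: variable names match terraform.tfvars above; defaults are assumed.
variable "admin_password" {
  type      = string
  default   = null
  sensitive = true
}

variable "ssh_public_key" {
  type    = string
  default = null
}

locals {
  # True when an SSH key was supplied; could drive
  # disable_password_authentication on the gateway VMs.
  use_ssh_key = var.ssh_public_key != null
}

# Fails "terraform plan" unless exactly one authentication method is set.
resource "terraform_data" "auth_check" {
  lifecycle {
    precondition {
      # Boolean inequality acts as exclusive-or here
      condition     = (var.admin_password != null) != (var.ssh_public_key != null)
      error_message = "Set exactly one of admin_password or ssh_public_key in terraform.tfvars."
    }
  }
}
```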

Enforza Company ID

enforza_companyId = "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx"

You can find your enforza_companyId in the Enforza Cloud Controller portal: navigate to "Profile" and copy the Company ID.

High Availability Features

Automatic Failover

- Load Balancer Health Probes: Continuously monitor gateway health

- Traffic Distribution: Healthy gateways automatically receive traffic

- Zone Redundancy: Gateways in separate AZs for zone-level failure protection

Scaling and Resilience

- Active-Active: Both gateways handle traffic simultaneously

- No Single Point of Failure: Load balancer distributes across healthy nodes

- Zone Isolation: AZ-1 failure doesn't affect AZ-2 gateway

Cost Estimate

Monthly cost: ~$60-80 (UK South region)

- 2x Standard_B1s VMs: ~$30-40/month

- Storage (Premium SSD): ~$10/month

- 2x Public IPs: ~$6/month

- Load Balancer Standard: ~$5/month

- Networking: ~$5-15/month

Still significantly cheaper than Azure Firewall (~$900/month)

Benefits Over Single Gateway

✅ High Availability: No single point of failure

✅ Zone Redundancy: Protection against AZ-level outages

✅ Load Distribution: Better performance under load

✅ Automatic Failover: Seamless traffic redirection

✅ Scalable: Easy to add more gateways

✅ Cost Effective: Still ~90% cheaper than Azure Firewall

Troubleshooting

Gateway HA Issues

- Check AZ availability:

az vm list-skus --location uksouth --zone

- Verify load balancer health: All backend pool members should be healthy

- Test individual gateways: SSH to each gateway and verify that the Enforza agent is running

Load Balancer Issues

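- Check that you have added the MANAGEMENT RULE allowing the health check probes (see step 5 of Quick Start above)

- Check backend pool:

az network lb address-pool show --resource-group enforza-ha-multi-az-gateway --lb-name enforza-ha-gateway-lb --name <backend_pool_name>

- Verify health probes: Ensure gateways respond on the probe port (TCP 22)

- Route table verification: Confirm private subnets route to the LB IP (10.0.1.100)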

Private VMs can't reach internet?

- Verify route table association: Private subnets → Load Balancer

- Check gateway health: Both gateways should be healthy in LB

- Enforza agent status: Verify agents are running on both gateways

Usage Examples

Deploy Private VMs (Optional)

After creating the gateways, you can deploy VMs in the private subnets. Their internet-bound traffic is routed through the gateways automatically:

# Example: Add to main.tf to create private VM

resource "azurerm_network_interface" "private_vm" {
  name                = "private-vm-nic"
  location            = azurerm_resource_group.main.location
  resource_group_name = azurerm_resource_group.main.name

  ip_configuration {
    name                          = "internal"
    subnet_id                     = azurerm_subnet.private_1.id # or private_2
    private_ip_address_allocation = "Dynamic"
  }
}
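The network interface above still needs a virtual machine attached to it. A minimal sketch follows, assuming SSH key authentication via var.ssh_public_key, the azureuser login used elsewhere in this README, and an Ubuntu 22.04 marketplace image; the VM name, size and disk type are illustrative choices rather than values from the repository.

```hcl
# Sketch only: a small Linux VM in a private subnet, with no public IP.
resource "azurerm_linux_virtual_machine" "private_vm" {
  name                  = "private-vm" # assumed
  location              = azurerm_resource_group.main.location
  resource_group_name   = azurerm_resource_group.main.name
  size                  = "Standard_B1s"
  admin_username        = "azureuser"
  network_interface_ids = [azurerm_network_interface.private_vm.id]

  # Assumes key-based login; the key variable is the one set in terraform.tfvars
  admin_ssh_key {
    username   = "azureuser"
    public_key = var.ssh_public_key
  }

  os_disk {
    caching              = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }

  # Ubuntu 22.04 LTS from the Azure Marketplace
  source_image_reference {
    publisher = "Canonical"
    offer     = "0001-com-ubuntu-server-jammy"
    sku       = "22_04-lts-gen2"
    version   = "latest"
  }
}
```

Because the VM has no public IP, it is reachable only from inside the VNet, for example by hopping through one of the gateways.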

Monitor Traffic

SSH to the gateway and monitor routing:

# Watch traffic flowing through gateway

sudo tcpdump -i any -n net 10.0.10.0/24 or net 10.0.20.0/24

# Check routing table

ip route show

Test Connectivity from Private VMs

# From a private subnet VM (once deployed)

curl ifconfig.me # Should show one of the gateways' public IPs

ping 8.8.8.8 # Should work via gateway

Customization

Change VM Size

vm_size = "Standard_B2s" # More powerful

# vm_size = "Standard_B1ls" # Alternative: even cheaper (set only one vm_size)

Modify Network Ranges

vnet_address_space = ["192.168.0.0/16"]

public_subnet_prefix = "192.168.1.0/24"

private_subnet_1_prefix = "192.168.10.0/24"

private_subnet_2_prefix = "192.168.20.0/24"
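The gateway addresses (10.0.1.4, 10.0.1.5) and the load balancer frontend (10.0.1.100) all live in the public subnet, so they have to move with public_subnet_prefix when the ranges change. Whether the repository derives them automatically is not stated here. One possible approach, sketched with hypothetical local names, is to compute them with cidrhost and to validate the prefix up front:

```hcl
# Sketch only: the default matches the range documented in this README.
variable "public_subnet_prefix" {
  type    = string
  default = "10.0.1.0/24"

  validation {
    # cidrhost fails on malformed CIDR input, and can() turns that into false
    condition     = can(cidrhost(var.public_subnet_prefix, 0))
    error_message = "public_subnet_prefix must be a valid CIDR block, for example 10.0.1.0/24."
  }
}

locals {
  # Host numbers 4, 5 and 100 reproduce the documented addresses:
  # 10.0.1.4, 10.0.1.5 and 10.0.1.100 with the default prefix.
  gateway_1_ip   = cidrhost(var.public_subnet_prefix, 4)
  gateway_2_ip   = cidrhost(var.public_subnet_prefix, 5)
  lb_frontend_ip = cidrhost(var.public_subnet_prefix, 100)
}
```

With public_subnet_prefix = "192.168.1.0/24", local.lb_frontend_ip evaluates to 192.168.1.100, which is also the address the private route tables would need as their next hop.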

Security Note

This configuration uses "permit any any" NSG rules for simplicity. In production:

- Restrict SSH to specific source IPs

- Implement more granular firewall rules

- Consider Azure Bastion for secure access

- Use Key Vault for credentials
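As a starting point for the first two recommendations, the "permit any any" rules could be replaced with narrower ones along these lines. This is a sketch under assumptions: var.admin_source_cidr and azurerm_network_security_group.gateway are hypothetical names, priorities are arbitrary, and the rule set has not been checked against the Enforza agent's own connectivity requirements.

```hcl
# Sketch only: tighter inbound rules for the gateway NSG.
variable "admin_source_cidr" {
  description = "Hypothetical variable: CIDR allowed to SSH to the gateways"
  type        = string
}

# SSH only from the administrator's address range
resource "azurerm_network_security_rule" "ssh_from_admin" {
  name                        = "allow-ssh-from-admin"
  priority                    = 100
  direction                   = "Inbound"
  access                      = "Allow"
  protocol                    = "Tcp"
  source_port_range           = "*"
  destination_port_range      = "22"
  source_address_prefix       = var.admin_source_cidr
  destination_address_prefix  = "*"
  resource_group_name         = azurerm_resource_group.main.name
  network_security_group_name = azurerm_network_security_group.gateway.name # assumed
}

# Load balancer health probes (TCP 22) from the Azure platform
resource "azurerm_network_security_rule" "lb_probe" {
  name                        = "allow-azure-lb-probe"
  priority                    = 110
  direction                   = "Inbound"
  access                      = "Allow"
  protocol                    = "Tcp"
  source_port_range           = "*"
  destination_port_range      = "22"
  source_address_prefix       = "AzureLoadBalancer"
  destination_address_prefix  = "*"
  resource_group_name         = azurerm_resource_group.main.name
  network_security_group_name = azurerm_network_security_group.gateway.name # assumed
}

# Egress traffic from the VNet that the gateways forward to the internet
resource "azurerm_network_security_rule" "forwarded_from_vnet" {
  name                        = "allow-forwarded-from-vnet"
  priority                    = 120
  direction                   = "Inbound"
  access                      = "Allow"
  protocol                    = "*"
  source_port_range           = "*"
  destination_port_range      = "*"
  source_address_prefix       = "10.0.0.0/16" # VNet range from this README
  destination_address_prefix  = "*"
  resource_group_name         = azurerm_resource_group.main.name
  network_security_group_name = azurerm_network_security_group.gateway.name # assumed
}
```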

Clean Up

terraform destroy

This will remove all created resources and stop billing.